Building AI-Native Orgs

Learn more about the HyperTrail platform and discover how it transforms business operations and customer experience across industries.


Ambient Agents in 60 Seconds

Think of an ambient agent as an always‑on co‑worker that sleeps until something important happens. An ambient agent waits for a trigger: an asynchronous event emitted by one of the 100+ systems and applications that keep your business running (a shipment delay, an abandoned cart, a spike in vibration on a pump). When the trigger arrives, the agent immediately asks "why did you wake me?" and requests the relevant context around the event: who, what, when, and why. Context fuels agentic reasoning. The agent uses it to apply rules and policies, choose the best outcome, and act across systems and applications. Done right, fleets of ambient agents can automate thousands of micro‑decisions every minute, lifting revenue, lowering cost, and delighting customers without dashboards or tickets.

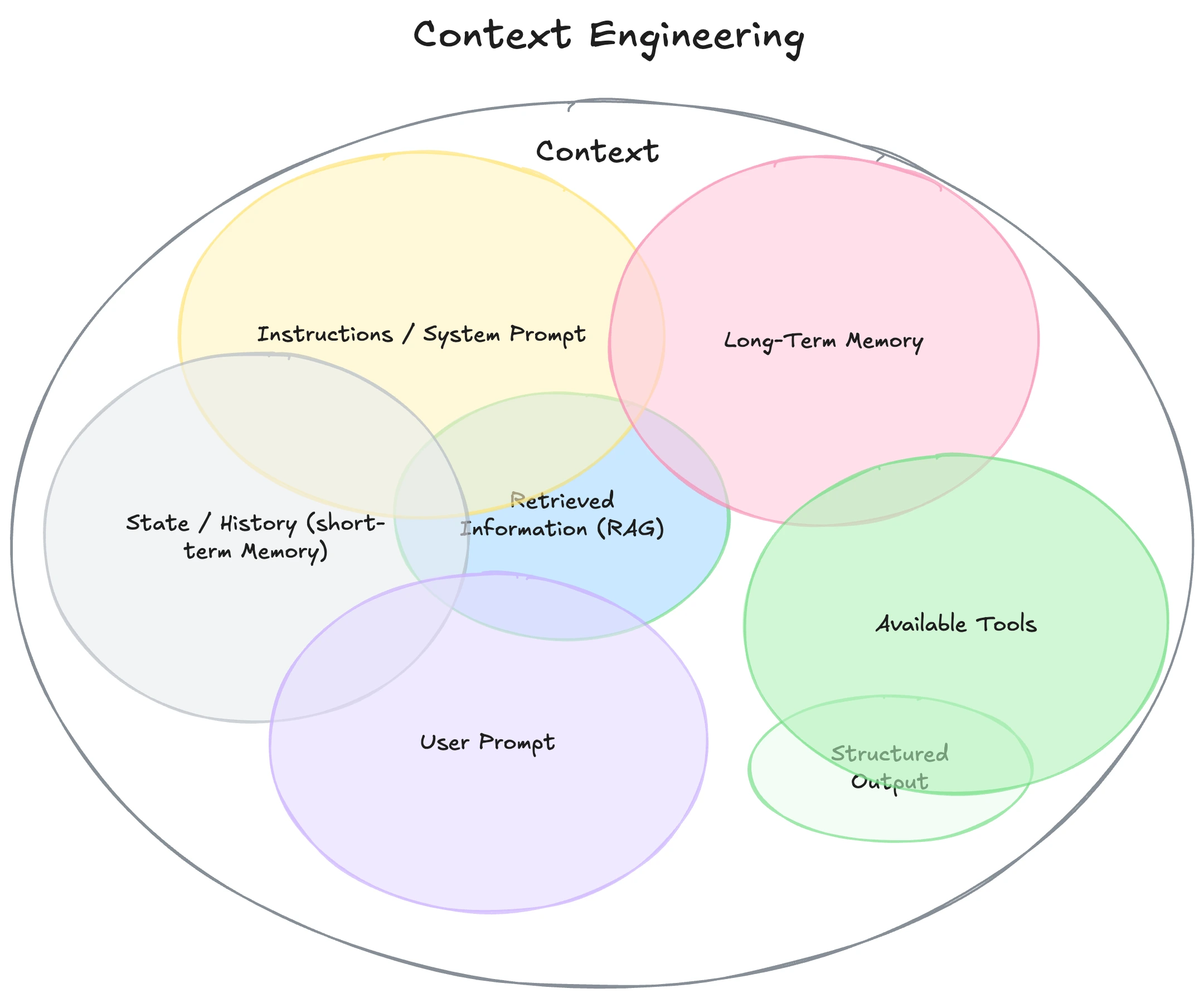
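The wake, ask, decide, act loop described above can be sketched in a few lines of Python. This is a minimal illustration, not HyperTrail's implementation: `Event`, `AmbientAgent`, `fetch_context`, and the `delay_policy` rule are hypothetical names, and a plain dict stands in for a real context store.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Event:
    """An asynchronous trigger from a source system (hypothetical shape)."""
    kind: str       # e.g. "shipment_delayed"
    entity_id: str  # the business object the event is about


# A policy turns (trigger, context) into an action name, or None for "do nothing".
Policy = Callable[[Event, dict], Optional[str]]


@dataclass
class AmbientAgent:
    fetch_context: Callable[[str], dict]  # hypothetical context lookup
    policies: Dict[str, Policy]           # event kind -> rule to apply
    actions_taken: List[Tuple[str, str]] = field(default_factory=list)

    def on_event(self, event: Event) -> Optional[str]:
        policy = self.policies.get(event.kind)
        if policy is None:
            return None  # not a trigger this agent handles; go back to sleep
        # "Why did you wake me?": pull who/what/when/why for the entity.
        context = self.fetch_context(event.entity_id)
        action = policy(event, context)
        if action is not None:
            self.actions_taken.append((event.entity_id, action))
        return action


# Usage: an illustrative delay policy over an in-memory context store.
orders = {"order-123": {"customer_tier": "gold", "days_late": 3}}


def delay_policy(event: Event, ctx: dict) -> Optional[str]:
    if ctx["customer_tier"] == "gold" and ctx["days_late"] >= 2:
        return "issue_voucher"
    return "send_status_update"


agent = AmbientAgent(fetch_context=orders.__getitem__,
                     policies={"shipment_delayed": delay_policy})
print(agent.on_event(Event("shipment_delayed", "order-123")))  # issue_voucher
print(agent.on_event(Event("cart_abandoned", "order-123")))    # None
```

The agent does nothing until a trigger it cares about arrives, and every decision is driven by the context it fetches, not by the event payload alone.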

Why Context Is the Fuel

Inside an enterprise, context is the real‑time understanding of every entity (customer, order, asset, claim) and its ever‑changing state. But context doesn’t just mean “more data”; it means standardized data that arrives in a form the agent can trust. Every source system holds only a fragment of the data that describes a customer or an asset, and each speaks its own dialect, with different timestamps, currencies, and identifiers. The most effective way to build real‑time context is to abstract it from those hundreds of systems and applications and store it as digital representations of business objects (digital twins) that are continuously enriched by the same streaming events that trigger ambient agents. Digital twins resolve the fragments and dialects into a single semantic model, so “Order 123,” “INV‑123,” and “SalesDoc 123” all collapse into the same digital twin, which represents the state of the entity right now in a normalized, shared vocabulary. Presenting information in this uniform shape eliminates the brittle, ad‑hoc mapping logic that makes agent reasoning unpredictable.
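Here is a minimal sketch of how the “Order 123” / “INV‑123” / “SalesDoc 123” collapse might work, assuming a hand‑maintained alias table and fixed exchange rates. `ALIASES`, `FX_TO_USD`, `normalize`, and the twin id format `order:123` are illustrative assumptions; a production system would resolve identities and rates dynamically.

```python
from datetime import datetime, timezone
from decimal import Decimal
from typing import Dict, Tuple

# Hypothetical alias table: (source system, local id) -> canonical twin id.
ALIASES = {
    ("erp", "Order 123"): "order:123",
    ("billing", "INV-123"): "order:123",
    ("crm", "SalesDoc 123"): "order:123",
}

# Illustrative FX rates into one shared currency (not real market data).
FX_TO_USD = {"USD": Decimal("1"), "EUR": Decimal("1.10")}


def normalize(source: str, record: dict) -> Tuple[str, dict]:
    """Map one source record onto the twin's shared vocabulary."""
    twin_id = ALIASES[(source, record["id"])]
    fields = {}
    if "amount" in record:
        fields["amount_usd"] = record["amount"] * FX_TO_USD[record["currency"]]
    if "ts" in record:
        ts = record["ts"]
        # Sources disagree on clocks: epoch seconds vs ISO 8601 with offsets.
        if isinstance(ts, (int, float)):
            fields["updated_at"] = datetime.fromtimestamp(ts, tz=timezone.utc)
        else:
            fields["updated_at"] = datetime.fromisoformat(ts).astimezone(timezone.utc)
    if "status" in record:
        fields["status"] = record["status"].lower()
    return twin_id, fields


twins: Dict[str, dict] = {}
for source, rec in [
    ("erp", {"id": "Order 123", "status": "SHIPPED", "ts": 1700000000}),
    ("billing", {"id": "INV-123", "amount": Decimal("100"), "currency": "EUR"}),
    ("crm", {"id": "SalesDoc 123", "ts": "2023-11-14T23:13:20+01:00"}),
]:
    twin_id, fields = normalize(source, rec)
    twins.setdefault(twin_id, {}).update(fields)

print(twins)  # one twin, "order:123", built from three dialects
```

The ERP's epoch timestamp and the CRM's `+01:00` ISO string turn out to be the same instant once both are expressed in UTC, which is exactly the kind of dialect mismatch the twin absorbs so the agent never has to.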

Standardization also compresses the agent’s context window without losing what matters. Irrelevant columns, NULL‑filled placeholders, and verbose XML blobs are stripped away; what remains is a tight, task‑specific payload that can be ingested, reasoned over, and acted upon in milliseconds. The result is higher decision accuracy, lower token costs, and fewer hallucinations, because the agent sees only the facts that matter, expressed in a consistent vocabulary. No context → no reasoning → no action. That’s why so many organizations that pilot LLMs quickly hit a wall: the model is fine; the context is missing.
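The compression idea can be illustrated with a small filter that keeps only task‑relevant, non‑empty fields and serializes them without whitespace. The `compact_context` helper and the field names below are hypothetical, and character count is only a rough proxy for tokens.

```python
import json


def compact_context(record: dict, relevant: set) -> str:
    """Keep task-relevant, non-empty fields and serialize them compactly."""
    slim = {
        k: v for k, v in record.items()
        if k in relevant and v not in (None, "", [], {})  # drop empty placeholders
    }
    # sort_keys gives a stable shape; custom separators drop JSON whitespace.
    return json.dumps(slim, sort_keys=True, separators=(",", ":"))


raw = {
    "order_id": "order:123",
    "status": "delayed",
    "promised_date": "2024-05-02",
    "legacy_xml": "<doc><line>...</line></doc>",  # verbose blob, not relevant
    "fax_number": None,                           # NULL-filled placeholder
    "notes": "",
    "customer_tier": "gold",
}
payload = compact_context(
    raw, relevant={"order_id", "status", "promised_date", "customer_tier", "notes"}
)
print(payload)
print(len(json.dumps(raw)), "->", len(payload), "characters")
```

The agent receives four facts in a fixed order instead of seven fields of mixed quality.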

5 reasons your pilot will hit a wall:

1 · Your Data Pipeline Is Still a Batch Conveyor Belt

If it takes 15 minutes (or 15 hours) for an event to reach your analytics stack, your agent will wake up too late. Agents need sub‑second access to freshly ingested events: think Kafka topics or Kinesis streams feeding a low‑latency store.

Consequence: Decisions lag, customer experiences feel retro, and ROI shrinks to vanity demos.
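To make the batch versus streaming contrast concrete, the sketch below uses an in‑process `asyncio.Queue` as a stand‑in for a Kafka topic or Kinesis stream (an assumption for illustration; no broker client is involved). The consumer suspends until each event arrives and records the lag between emission and pickup.

```python
import asyncio
import time


async def produce(queue: asyncio.Queue, n: int) -> None:
    for i in range(n):
        # Stamp each event with its emit time so the consumer can measure lag.
        await queue.put({"seq": i, "emitted_at": time.monotonic()})
        await asyncio.sleep(0.01)
    await queue.put(None)  # sentinel: end of stream


async def consume(queue: asyncio.Queue, lags: list) -> None:
    while True:
        event = await queue.get()  # suspends until an event is available
        if event is None:
            break
        lags.append(time.monotonic() - event["emitted_at"])


async def main(n: int = 5) -> list:
    queue: asyncio.Queue = asyncio.Queue()
    lags: list = []
    await asyncio.gather(produce(queue, n), consume(queue, lags))
    return lags


lags = asyncio.run(main())
print(f"max event-to-agent lag: {max(lags) * 1000:.1f} ms")
```

On a typical machine the measured lag is a small fraction of a second, whereas a job that runs every 15 minutes has a worst‑case lag of roughly 15 minutes no matter how fast the agent itself reasons.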

2 · You Don’t Have a System of Reference

Digital twins unify data from hundreds of disconnected systems into a single source of reference per entity. Without that layer, you’re left with ETL jobs and joins across CRM tables, IoT feeds, and data‑lake buckets, each with its own keys, clocks, and null values.

Consequence: Agents reason on blurry or conflicting data, leading to false positives, missed upsells, or, worse, customer‑trust debacles.
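A system of reference also has to decide which fragment wins when sources disagree. One simple policy, shown here as an assumption rather than the way any particular product works, is last‑writer‑wins per field on UTC‑normalized timestamps, ignoring NULLs and keeping provenance. `merge_fragments` and the sample records are hypothetical.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Iterable, Tuple

Fragment = Tuple[str, datetime, Dict[str, Any]]  # (source, observed_at in UTC, fields)


def merge_fragments(fragments: Iterable[Fragment]) -> Dict[str, Tuple[Any, datetime, str]]:
    """Last-writer-wins per field, keeping provenance for each value."""
    merged: Dict[str, Tuple[Any, datetime, str]] = {}
    for source, observed_at, fields in fragments:
        for name, value in fields.items():
            if value is None:
                continue  # a NULL means "unknown", not a newer fact
            current = merged.get(name)
            if current is None or observed_at > current[1]:
                merged[name] = (value, observed_at, source)
    return merged


utc = timezone.utc
customer = merge_fragments([
    ("crm", datetime(2024, 5, 1, 9, 0, tzinfo=utc), {"email": "a@old.example", "tier": "silver"}),
    ("billing", datetime(2024, 5, 1, 9, 5, tzinfo=utc), {"tier": "gold", "email": None}),
    ("support", datetime(2024, 5, 1, 8, 0, tzinfo=utc), {"email": "a@new.example"}),
])
print({name: (value, source) for name, (value, _, source) in customer.items()})
```

Other policies (for example, trusting billing over CRM for payment fields regardless of recency) are equally valid; the point is that the rule lives in one place instead of being re‑implemented inside every agent.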

3 · Context Expires Faster Than You Can Query

A customer may switch from “browsing” to “ready‑to‑buy” in seconds; an engine can move from “healthy” to “critical” in two sensor ticks. If your architecture can’t answer “What was true for this twin 500 milliseconds ago?”, you can’t compute real‑time deltas or trend velocity.

Consequence: Agents become reactive log‑parsers instead of proactive decision‑makers.
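Answering “What was true 500 milliseconds ago?” requires facts that are versioned at write time rather than overwritten. The sketch below keeps an append‑only history per attribute and uses Python's standard `bisect` module for as‑of lookups. `VersionedAttribute`, `velocity`, and the vibration readings are hypothetical, and times are plain seconds for readability.

```python
import bisect
from typing import List, Optional


class VersionedAttribute:
    """Append-only history of one twin attribute, versioned at write time."""

    def __init__(self) -> None:
        self._times: List[float] = []
        self._values: List[float] = []

    def write(self, t: float, value: float) -> None:
        # Assumes writes arrive in time order; late data would need an insort.
        self._times.append(t)
        self._values.append(value)

    def as_of(self, t: float) -> Optional[float]:
        """Value that was true at time t (latest write at or before t)."""
        i = bisect.bisect_right(self._times, t)
        return self._values[i - 1] if i else None


def velocity(attr: VersionedAttribute, now: float, window: float) -> Optional[float]:
    """Average rate of change over [now - window, now], in units per second."""
    before, after = attr.as_of(now - window), attr.as_of(now)
    if before is None or after is None:
        return None  # not enough history to compute a delta
    return (after - before) / window


# Usage: pump vibration readings (illustrative values) sampled every 250 ms.
vib = VersionedAttribute()
for t, v in [(0.0, 2.0), (0.25, 2.1), (0.5, 2.2), (0.75, 4.0), (1.0, 6.5)]:
    vib.write(t, v)

print(vib.as_of(0.5))                          # state 500 ms before t = 1.0
print(velocity(vib, now=1.0, window=0.5))      # trend over the last 500 ms
```

Because nothing is overwritten, the delta and its velocity come from two cheap lookups instead of a log replay.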

4 · Outcome Measurement Is Retrospective

Business metrics are the feedback loop that shows teams how their assumptions and actions affect customer behavior. Yet those metrics suffer from the same dislocation and fragmentation as the underlying data: they are assembled after the fact, in separate systems, away from the entities they describe.

Consequence: Agents can't steer toward outcome goals, so agentic improvement stalls.
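If outcomes are recorded next to the entities they describe, an agent can consult them at decision time instead of waiting for a quarterly report. The following is a deliberately simple sketch: `OutcomeLedger` is a hypothetical name, the goal is assumed to be “abandoned cart converted”, and the greedy choice of the best observed success rate ignores exploration and sample size, which a real system would need to handle.

```python
from collections import defaultdict
from typing import Iterable


class OutcomeLedger:
    """Hypothetical record of actions and whether each met the outcome goal."""

    def __init__(self) -> None:
        self._tries = defaultdict(int)
        self._wins = defaultdict(int)

    def record(self, action: str, goal_met: bool) -> None:
        self._tries[action] += 1
        self._wins[action] += int(goal_met)

    def success_rate(self, action: str) -> float:
        tries = self._tries.get(action, 0)
        return self._wins.get(action, 0) / tries if tries else 0.0

    def best_action(self, candidates: Iterable[str]) -> str:
        # Greedy: pick the action with the highest observed success rate.
        return max(candidates, key=self.success_rate)


ledger = OutcomeLedger()
for action, converted in [
    ("discount_10", True), ("discount_10", False),
    ("free_shipping", True), ("free_shipping", True),
    ("reminder_email", False),
]:
    ledger.record(action, converted)

print(ledger.best_action(["discount_10", "free_shipping", "reminder_email"]))
```

Each recorded outcome immediately changes the next decision, which is the feedback loop that retrospective reporting cannot provide.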

5 · Integration Work Is Still Hand‑Cranked

Each new data source spawns a multi‑week cycle of scoping, mapping, and ETL scripting. When a vendor ships a minor API update, the process starts over. At scale, this “integration drag” drains engineering capacity and turns real‑time ambitions into backlog items.

Consequence: By the time your context graph covers 80% of entities, the first 20% is already stale.
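Part of escaping hand‑cranked integration is noticing drift the moment a vendor changes a payload, rather than when a downstream job breaks. This sketch compares a flat, top‑level field‑to‑type map against an expected one; `schema_of` and `diff_schema` are hypothetical helpers, nested payloads would need recursion, and detecting drift is only the first step toward automated schema evolution.

```python
from typing import Any, Dict, List


def schema_of(payload: Dict[str, Any]) -> Dict[str, str]:
    """Flat field -> type-name map for one JSON-like payload."""
    return {k: type(v).__name__ for k, v in payload.items()}


def diff_schema(expected: Dict[str, str], observed: Dict[str, str]) -> Dict[str, List[str]]:
    """Report fields that were added, removed, or changed type."""
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "retyped": sorted(
            k for k in expected.keys() & observed.keys() if expected[k] != observed[k]
        ),
    }


expected = schema_of({"order_id": "123", "total": 99.5, "status": "open"})
# A "minor" vendor API update: status renamed, total now a string, new field.
incoming = {"order_id": "123", "total": "99.50", "state": "open", "channel": "web"}
drift = diff_schema(expected, schema_of(incoming))
print(drift)
```

A diff like this can open a mapping change automatically instead of waiting for a broken pipeline to reveal it.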

Defusing these data and context readiness bombs:

HyperTrail was built as an Agentic OS with context management. Streaming‑native ingest with self‑generating connectors brings all your source systems and data together. EntityDB stores each business object, versions every fact at write time, and gives agents millisecond "state-as-of-now" context within and across digital twins. Outcomes live at the same level as the data, embedded in the digital twin and used to instruct how the agent should reason, decide, and act. Automated schema evolution keeps integrations evergreen.

Whether you adopt HyperTrail or roll your own, fixing these gaps is non‑negotiable. The next wave of AI productivity will belong to enterprises that treat context as first‑class data: arriving in time, shaped by twins, governed by code, and perpetually integration‑ready.

Miss those prerequisites, and your ambient agents will stay exactly that: ambient, dormant, and delivering zero value.